LLM Basics

Large Language Models (LLMs) are really good at understanding and creating human language. They work based on a structure called the transformer. There are three main types of LLMs:

- Masked Language Model: Guesses the missing words by looking at the words around them.

- Causal Language Model: Predicts the next word by looking at the words that came before it.

- Seq-to-Seq Model: Maps an input sequence to an output sequence, for tasks like translating languages or summarizing texts.

In this blog, we're going to talk a lot about LLMs. We'll cover how they're built, how they're trained and fine-tuned, how we measure how good they are, and what we use them for. We'll also touch on how they work when they're actually being used, and some cool things they can do. This blog is pretty advanced, so you should know a bit about machine learning and deep learning. But if you're ready to learn, grab a coffee and let's dive into the world of LLMs. I'm Rahul, and welcome to TechTalk Verse!

The journey of training a Large Language Model (LLM) typically involves three main phases:

1. Pretraining

2. Instruction Fine-tuning

3. Reinforcement Learning from Human Feedback (RLHF)

In this article, I'll provide a broad overview of the training process for an LLM across these stages.

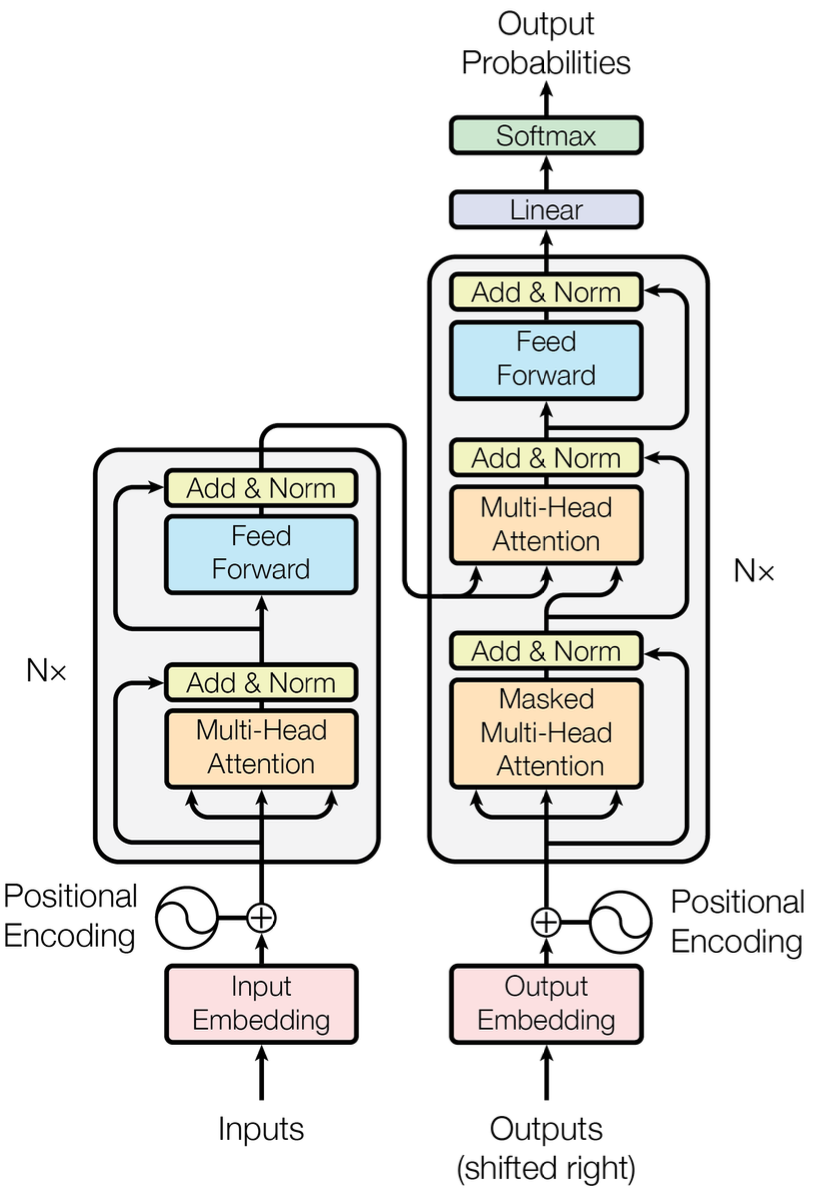

Transformers

The original Transformer uses an encoder-decoder architecture to process input sentences. Let's take a simplified tour through its various layers:

1. Tokenization: The input sentence gets broken down into subword tokens using a scheme such as Byte Pair Encoding (BPE), and each token is mapped to a token ID, forming a sequence of integers.

2. Embedding: These token IDs are then converted into embeddings through an embedding layer, capturing their semantic meanings.

3. Positional Encoding: To retain the positional information of tokens in the sequence, positional embeddings are added to the token embeddings. This ensures that the model understands the order of words.

4. Encoder Layers: The encoder comprises six identical layers, each containing multi-head attention layers and fully connected feed-forward layers. Residual connections, combined with normalization layers, enhance the flow of information through these layers.

5. Attention Mechanism: Within the encoder, attention layers calculate weighted sums of values based on queries and keys. Transformers use scaled dot-product attention: the dot product of each query with every key is divided by the square root of the key dimension and passed through a softmax to produce the weights.

6. Decoder Layers: Similarly, the decoder consists of six identical layers, with each layer containing Masked Multi-Head Attention, Multi-Head Attention, and fully connected feed-forward layers.

7. Output Generation: In the decoder, the output embeddings are shifted right by one position, and the Masked Multi-Head Attention layer blocks attention to later positions. Together these ensure that the prediction for a given position depends solely on previously generated tokens.

8. Final Output: The Multi-Head Attention layer in the decoder takes its queries from the output of the Masked Multi-Head Attention layer and its keys and values from the encoder output. The result passes through the feed-forward layer, and a final linear layer with softmax produces the probabilities for the next token in the output sequence.
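The attention steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not how production libraries implement it: it uses a single head, skips the learned projection matrices, and the function names `softmax` and `attention` are my own. The `causal` flag mimics what the decoder's masked attention does.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values, causal=False):
    """Scaled dot-product attention over lists of vectors.

    With causal=True, position i may only attend to positions <= i,
    which is what the decoder's masked attention does.
    """
    d_k = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        # Dot product of the query with every key, scaled by sqrt(d_k)
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        if causal:
            # Hide later positions by giving them a score of -infinity
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        weights = softmax(scores)
        # Weighted sum of the value vectors
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
    return outputs

# Toy input: three token vectors used as queries, keys, and values
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
full = attention(x, x, x)
masked = attention(x, x, x, causal=True)
print(full[0])
print(masked[0])
```

With the mask on, the first position can only look at itself, so its output is just its own value vector. The last position sees the whole sequence either way.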

For a deeper understanding of attention mechanisms, one can explore resources like "The Attention Mechanism From Scratch."

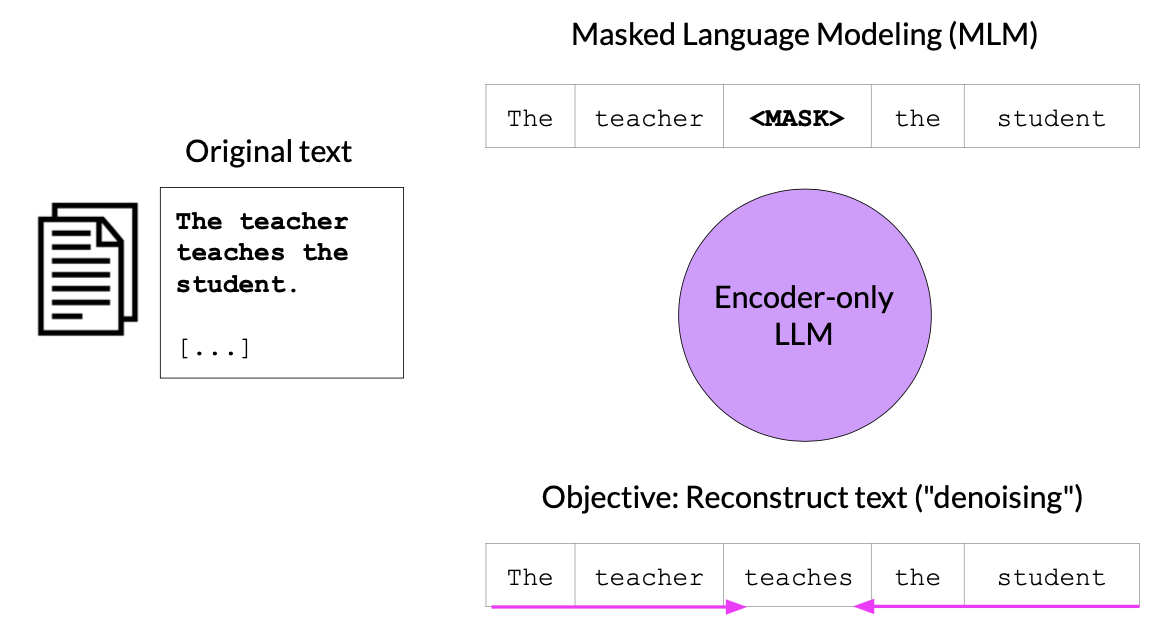

Encoder

Encoder models, also known as Autoencoding Models, are a type of language model used in natural language processing tasks. One common training method for these models is called Masked Language Modeling (MLM).

Here's how MLM works:

1. Pretraining Phase: The model is trained on a large amount of text data. During this training, some words in the input text are randomly hidden or "masked".

2. Objective: The model's goal is to predict the masked words based on the context provided by the surrounding words. This process helps the model understand the relationships between words in a sentence.

3. Benefits: By learning to predict masked words, the model gains a deeper understanding of language. These learned representations can then be fine-tuned for specific tasks.

For example:

- Input: "The teacher [MASK] the student."

- Output: "The teacher teaches the student."
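To make the masking step concrete, here is a small sketch of how training inputs and labels could be prepared. It is simplified on purpose: the function name `mask_tokens`, the whitespace tokenization, and the seed are my own choices, and the default of 15% is the masking rate used in the BERT paper, taken here as an assumption rather than a rule.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Hide a random subset of tokens and record what the model should predict.

    Returns (masked_tokens, labels), where labels[i] holds the original token
    at masked positions and None everywhere else.
    """
    rng = random.Random(seed)
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)   # the model sees [MASK] here
            labels.append(tok)    # and is trained to predict the original token
        else:
            masked.append(tok)
            labels.append(None)   # no loss is computed at unmasked positions
    return masked, labels

tokens = "The teacher teaches the student".split()
masked, labels = mask_tokens(tokens, mask_prob=0.3, seed=1)
print(masked)
print(labels)
```

Only the masked positions carry a label, which is why the model has to rely on the surrounding words on both sides to fill them in.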

Use Cases: Encoder models trained using MLM can be applied to various tasks such as sentiment analysis, named entity recognition, and more.

Examples of Encoder Models: Some well-known encoder models include BERT, RoBERTa, and others. These models have been pretrained using MLM and can be fine-tuned for specific tasks to achieve high performance.

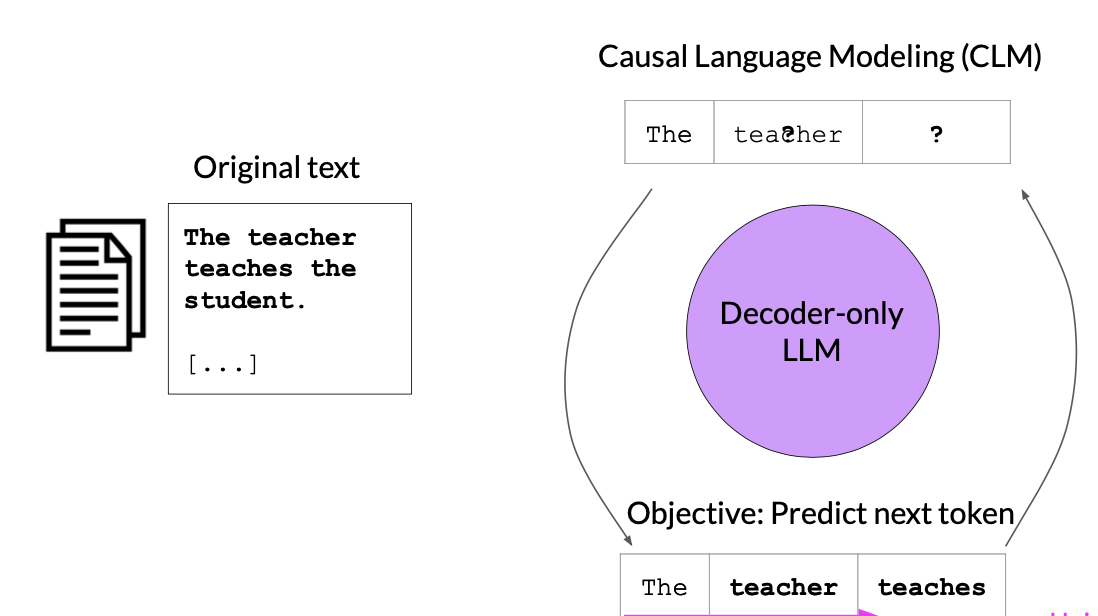

Decoder

Decoder models, also known as Autoregressive models, are another type of language model commonly used in natural language processing. These models are trained using a method called Causal Language Modeling (CLM).

Here's a breakdown of CLM:

1. Pretraining Phase: Just like with encoder models, the model is trained on a large corpus of text data. However, in CLM, the focus is on predicting the next word in a sequence given the preceding words.

2. Objective: The model sees only the words to the left of the position it is predicting. "Causal" refers to this one-directional view of the sequence, not to cause and effect. It predicts the next word based on the words that came before it, enabling it to generate text one token at a time in an autoregressive manner.

3. Benefits: By mastering the task of predicting the next word, the model develops a strong understanding of language structure and coherence. This understanding can then be leveraged for various NLP tasks.

For example:

- Input: "The teacher ____"

- Output: "The teacher teaches"
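One way to picture the training data for CLM: a single sentence yields one prediction task per position. The sketch below is only illustrative (the helper name `next_token_examples` is mine, and real models work on token IDs, not words).

```python
def next_token_examples(tokens):
    """Turn one token sequence into (context, next_token) training pairs."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

tokens = ["The", "teacher", "teaches", "the", "student"]
examples = next_token_examples(tokens)
for context, target in examples:
    print(context, "->", target)
```

In practice all of these predictions are computed in one forward pass, with the causal mask making sure each position only sees its own context.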

Use Cases: Decoder models trained using CLM are particularly useful for tasks like text generation. They can generate coherent and contextually relevant text based on a given prompt.

Examples of Decoder Models: Some prominent examples of decoder models include GPT (Generative Pretrained Transformer), BLOOM, and others. These models excel at generating text that is fluent and coherent, making them valuable tools for a wide range of applications in natural language processing.

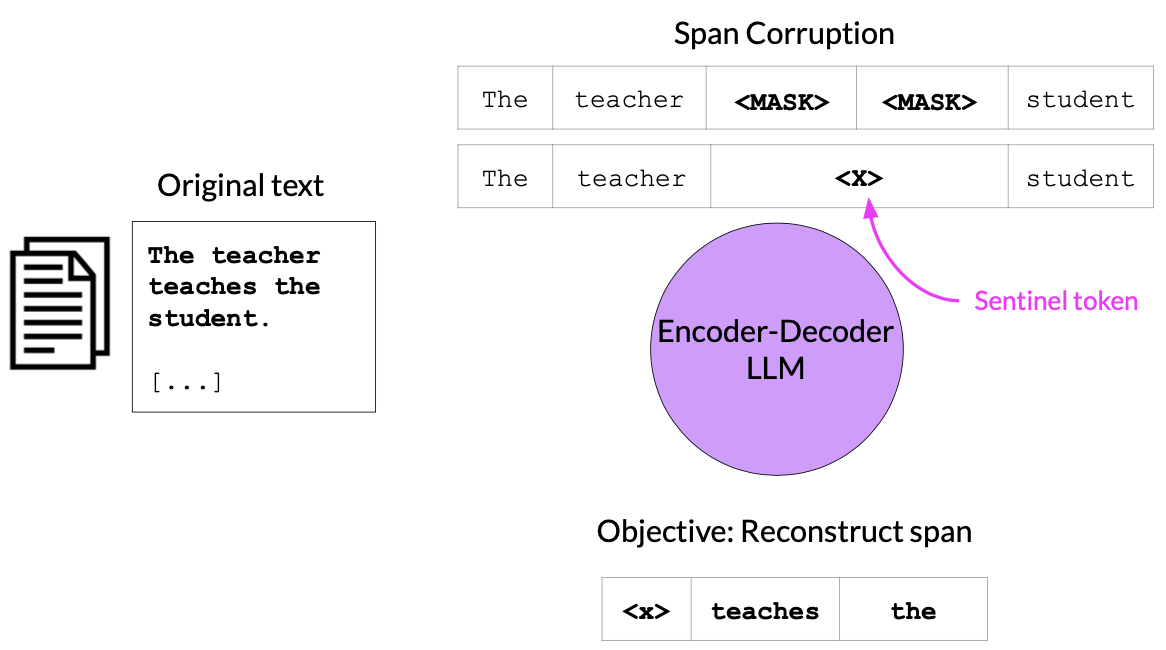

Encoder-Decoder Models

Encoder-Decoder Models, also known as sequence-to-sequence (Seq2Seq) models, are a type of architecture commonly used in natural language processing tasks.

Here's a breakdown of their functionality:

1. Nature: Seq2Seq models are designed to handle sequences of data, making them well-suited for tasks where input and output are sequences of varying lengths.

2. Training Approach: These models are typically trained using supervised learning techniques. This means they learn from paired input-output sequences, where each input is associated with a corresponding output.

3. Task Description: In Seq2Seq tasks, such as machine translation, the model is trained to generate an output sequence (e.g., translated text) based on an input sequence (e.g., original text).

For instance:

- Input Sequence: "Bonjour"

- Output Sequence: "Hello"
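For a paired example like this one, the decoder is usually fed the target sequence shifted right by one position during training. Here is a minimal sketch of that preparation step. The helper name `make_decoder_io` and the `<s>` and `</s>` marker tokens are assumptions for illustration; actual models define their own special tokens.

```python
def make_decoder_io(target_tokens, bos="<s>", eos="</s>"):
    """Build the decoder input and expected output for one paired example.

    The decoder input is the target shifted right by one position, so the
    token expected at step i is never part of the input seen up to step i.
    """
    decoder_input = [bos] + target_tokens
    decoder_output = target_tokens + [eos]
    return decoder_input, decoder_output

source = ["Bonjour"]   # goes to the encoder
target = ["Hello"]     # what the decoder should produce
dec_in, dec_out = make_decoder_io(target)
print(source, dec_in, dec_out)
```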

Use Cases: Encoder-Decoder models find application in a wide range of tasks including translation, text summarization, question answering, and more. They excel in scenarios where understanding and generating sequences of text are essential.

Examples of Encoder-Decoder Models: Some notable examples of Seq2Seq models include T5, BART, and others. These models have demonstrated exceptional performance across various NLP tasks, showcasing the versatility and effectiveness of the Seq2Seq architecture.

Pretraining

During the pretraining phase, the model undergoes training on a substantial amount of unstructured textual data in a self-supervised manner. However, a significant challenge in this phase lies in the computational cost involved.

Let's delve into the calculation of GPU RAM required to store and train a model with 1 billion parameters:

- GPU RAM for Storing Parameters:

  - Each parameter occupies 4 bytes (32-bit float).

  - Therefore, for 1 billion parameters: $4 \text{ bytes} \times 10^9 = 4 \text{ GB}$.

- Memory Required for Training:

  - In addition to storing parameters, memory is needed for gradients, optimizer states (e.g., Adam), activations, and temporary memory.

  - Considering these factors, we estimate an additional 20 bytes per parameter.

  - Total memory required for training: $4 \text{ bytes} + 20 \text{ extra bytes} = 24 \text{ bytes}$ per parameter.

Based on this calculation, the memory required for training is approximately 6 times greater than that needed for storing the model.

Therefore:

- Memory needed to store a 1 billion parameter model: 4GB (at 32-bit full precision).

- Memory needed to train a 1 billion parameter model: 24GB (at 32-bit full precision).

Here's a Python function to calculate the memory required for training a model with a given number of parameters:

    def calculate_training_memory(num_parameters):
        """Return the approximate bytes of GPU RAM needed to train at FP32."""
        # Each parameter requires 4 bytes (32-bit float)
        parameter_memory = num_parameters * 4
        # Gradients, optimizer states, activations, and temporary memory
        # add roughly 20 bytes per parameter
        training_memory = parameter_memory + (20 * num_parameters)
        return training_memory

    # Example calculation for 1 billion parameters
    num_params = 10**9
    required_memory = calculate_training_memory(num_params)
    print(f"Memory needed to train a {num_params:,} parameter model: "
          f"{required_memory / 10**9:.0f} GB")

This function takes the number of parameters as input and returns the total memory required for training the model.

Quantization

Quantization offers a way to reduce memory usage by converting numbers from a higher precision format, such as 32-bit floating point, to lower precision formats like 16-bit floating point or 8-bit integers. Let's understand the different datatypes used for quantized models and their corresponding memory requirements:

- FP32 (32-bit floating point): This is the standard floating-point format with 32 bits allocated for each number.

- FP16 (16-bit floating point): Using half the number of bits compared to FP32, this format reduces memory usage while maintaining a certain level of precision.

- BFLOAT16 (16-bit brain floating point): BFLOAT16 is a truncated version of FP32. It has the same number of bits as FP16 but keeps the 8 exponent bits of FP32 and shortens the fraction to 7 bits, so it covers the same range as FP32 at lower precision. It's commonly used for pretraining large language models like FLAN-T5.

- INT8 (8-bit integers): Representing numbers as 8-bit integers further reduces memory usage, albeit with a loss of precision compared to floating-point formats.
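Two of these formats are easy to try out with the standard library. The sketch below shows BFLOAT16 as a literal truncation of the FP32 bit pattern, and one simple way to map floats to 8-bit integers (symmetric "absmax" scaling). Both are illustrations under my own assumptions: real hardware usually rounds instead of truncating, there are several INT8 schemes, and the function names are made up for this post.

```python
import struct

def to_bfloat16(x):
    """Truncate a float to bfloat16 by keeping the top 16 bits of its FP32 form."""
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    return struct.unpack(">f", struct.pack(">I", bits & 0xFFFF0000))[0]

def quantize_int8(weights):
    """Symmetric absmax quantization: map floats to integers in [-127, 127].

    Assumes at least one weight is non-zero.
    """
    scale = max(abs(w) for w in weights) / 127
    return [round(w / scale) for w in weights], scale

def dequantize_int8(q_weights, scale):
    """Recover approximate floats from the 8-bit integers."""
    return [q * scale for q in q_weights]

weights = [0.12, -0.5, 0.33, 1.27]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
print(to_bfloat16(3.14159265))
print(q)
print(restored)
```

The restored values are close to the originals but not identical, which is the loss of precision that quantization trades for memory.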

Now, let's calculate the RAM requirements for storing a model with 1 billion parameters using different precision formats:

- Full Precision Model: Requires 4GB of RAM at 32-bit full precision.

- 16-bit Quantized Model: With a reduction in precision to 16-bit half precision, the RAM requirement decreases to 2GB.

- 8-bit Quantized Model: Further reducing precision to 8-bit, the RAM requirement reduces to 1GB.

Need and Advantage: The need for quantization arises from the desire to reduce memory usage without sacrificing model performance significantly. By using lower precision formats, we can store and process models more efficiently, leading to reduced hardware costs and improved performance in scenarios where memory is limited.

Here's a simple mathematical representation of the memory reduction achieved through quantization:

    # Calculate memory reduction from FP32 to 16-bit quantized model
    fp32_memory = 4  # GB
    fp16_memory = 2  # GB
    memory_reduction_fp16 = (fp32_memory - fp16_memory) / fp32_memory * 100
    print(f"Memory reduction from FP32 to 16-bit quantized model: {memory_reduction_fp16}%")

    # Calculate memory reduction from FP32 to 8-bit quantized model
    int8_memory = 1  # GB
    memory_reduction_int8 = (fp32_memory - int8_memory) / fp32_memory * 100
    print(f"Memory reduction from FP32 to 8-bit quantized model: {memory_reduction_int8}%")

These calculations demonstrate the percentage reduction in memory usage achieved by quantizing the model to 16-bit and 8-bit formats compared to the original FP32 format.

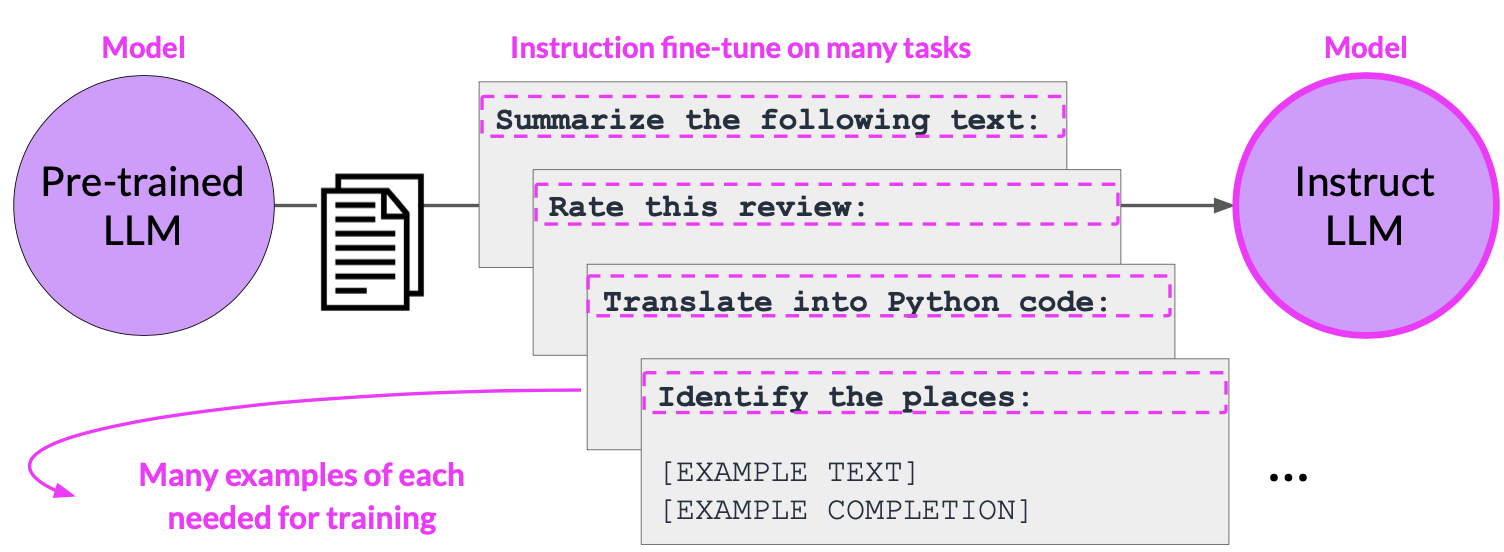

Fine-tuning

Fine-tuning in Large Language Models (LLMs) typically involves a process known as instruction fine-tuning. This approach extends traditional fine-tuning by providing high-level instructions or demonstrations to guide the model's behavior more precisely. Instead of merely adjusting the model's parameters based on labeled data, instruction fine-tuning leverages a set of labeled examples, usually in the form of {prompt, response} pairs. These pairs instruct the model on how to generate an appropriate response given a specific prompt or input.

Now, let's explore how fine-tuning works and its distinctions from pre-training:

1. Fine-tuning Process:

- During fine-tuning, the pre-trained LLM is further trained on a specific task or set of tasks using labeled examples.

- The model adjusts its parameters to better align with the new task's requirements, refining its ability to generate accurate responses based on the provided instructions.

2. Pre-training vs. Fine-tuning:

- Pre-training involves training an LLM on a large corpus of unlabeled text data using self-supervised learning techniques like masked language modeling (MLM) or causal language modeling (CLM).

- Fine-tuning, on the other hand, occurs after pre-training and involves training the model on labeled data for specific tasks. This process fine-tunes the model's parameters to adapt it to the nuances of the target task.

3. Catastrophic Forgetting:

- One challenge in fine-tuning is catastrophic forgetting, where the model loses previously learned information while adapting to new tasks.

- To mitigate catastrophic forgetting, several strategies can be employed:

  - Fine-tuning on multiple tasks simultaneously helps the model retain knowledge across different domains.

  - Parameter Efficient Fine-tuning (PEFT) techniques aim to fine-tune the model efficiently with minimal impact on previously learned knowledge.

Use Cases:

Fine-tuning LLMs enables them to excel in various NLP tasks, including sentiment analysis, text summarization, question answering, and more. For example, a pre-trained LLM like BERT can be fine-tuned on a sentiment analysis dataset, where the model learns to classify text based on its sentiment (positive, negative, neutral). Similarly, in question answering tasks, the model can be fine-tuned on a dataset containing question-answer pairs, enabling it to accurately respond to user queries.

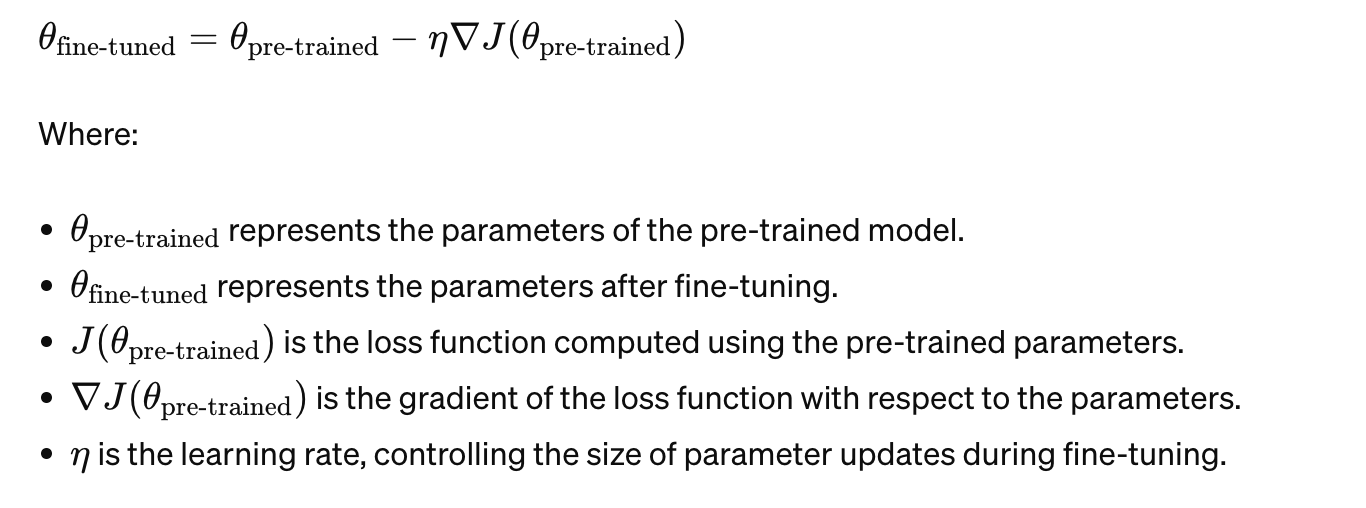

Mathematics of Fine-tuning:

Fine-tuning involves updating the model's parameters using gradient descent optimization based on a task-specific loss function. Mathematically, the fine-tuning process aims to minimize the discrepancy between the model's predictions and the ground truth labels provided in the labeled dataset.

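Here's a simplified illustration of fine-tuning with mathematics:

$$\theta_{t+1} = \theta_t - \eta \, \nabla_{\theta} \mathcal{L}(\theta_t)$$

Here $\theta_t$ denotes the model's parameters at step $t$, $\eta$ is the learning rate, and $\mathcal{L}$ is the task-specific loss computed on the labeled examples. This equation describes how the model's parameters are updated during fine-tuning to minimize the loss function and improve performance on the target task.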
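The gradient descent update described above can be tried on a toy problem. The sketch below stands in for an LLM with a two-parameter linear model and uses mean squared error as the loss; the starting values, learning rate, and dataset are all made up for illustration.

```python
def fine_tune_step(w, b, examples, lr=0.1):
    """One gradient descent step for y = w * x + b under mean squared error."""
    n = len(examples)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in examples) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in examples) / n
    # Move each parameter against its gradient, scaled by the learning rate
    return w - lr * grad_w, b - lr * grad_b

def mse(w, b, examples):
    """Mean squared error between predictions and labels."""
    return sum((w * x + b - y) ** 2 for x, y in examples) / len(examples)

# "Pre-trained" parameters and a small labeled dataset for the new task
w, b = 1.0, 0.0
examples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]  # follows y = 2x + 1

losses = []
for _ in range(50):
    losses.append(mse(w, b, examples))
    w, b = fine_tune_step(w, b, examples)
print(losses[0], losses[-1])
```

The loss shrinks step by step as the parameters move from their starting values toward ones that fit the labeled examples, which is the same idea as fine-tuning at a much smaller scale.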

PEFT

Parameter Efficient Fine-tuning (PEFT) is a technique aimed at updating only a small subset of parameters in a model during the fine-tuning process. This approach addresses two key issues: catastrophic forgetting and computational cost.

Need and Problem Resolution:

- Catastrophic Forgetting: When fine-tuning a model on a new task, there's a risk of the model forgetting previously learned information, leading to decreased performance on earlier tasks. PEFT helps mitigate this issue by focusing updates on specific parameters relevant to the new task, thereby preserving existing knowledge.

- Computational Cost: Full-scale fine-tuning, where all model parameters are updated, can be computationally expensive, especially for large models. By updating only a subset of parameters, PEFT reduces the computational burden, making fine-tuning more efficient.

Here's a breakdown of some PEFT methods:

- Selective: This method involves selecting a subset of initial Large Language Model (LLM) parameters to fine-tune. By targeting specific parameters, selective fine-tuning allows for more focused updates, reducing the risk of catastrophic forgetting.

- Reparameterization: Reparameterization techniques involve transforming model weights using a low-rank representation. For example, methods like LoRA (Low-Rank Adaptation) reparameterize weights to reduce the number of trainable parameters while preserving model performance.

- Additive: Additive methods involve introducing additional trainable layers or parameters to the model during fine-tuning. Examples include Adapter modules and Soft prompts, which add lightweight components to the model architecture without significantly increasing computational overhead.

LoRA

Low Rank Adaptation (LoRA) stands out as a Parameter Efficient Fine-tuning (PEFT) method, redefining model weights through a low-rank representation. Here's a concise breakdown of fine-tuning with LoRA, along with an illustrative example using a base Transformer model:

Fine-tuning with LoRA:

1. Freeze Original Weights: The original Large Language Model (LLM) weights are kept frozen to preserve their learned knowledge.

2. Inject Rank Decomposition Matrices: Two rank decomposition matrices are introduced into the model architecture.

3. Train Smaller Matrices: The weights of the smaller rank decomposition matrices are then trained to adapt the model to the target task.

Inference Update Steps:

1. Matrix Multiplication: Multiply the two low-rank matrices, B and A. Their product has the same dimensions as the original weight matrix.

2. Add to Original Weights: Add the resulting matrix to the original weights to obtain the updated weights used for inference.
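The two inference steps can be sketched with plain lists of rows. The sizes below are toy values chosen so the result is easy to check by hand, the two low-rank matrices are called B and A here, and the helper names `matmul` and `merge_lora` are my own, not part of any LoRA library.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def merge_lora(w, lora_b, lora_a):
    """Return W + B x A, the updated weights used at inference time."""
    delta = matmul(lora_b, lora_a)   # same shape as W
    return [[w_ij + d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]

# Toy sizes: W is 4 x 3 (d x k), B is 4 x 1 (d x r), A is 1 x 3 (r x k), r = 1
w = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
lora_b = [[1.0], [0.0], [2.0], [0.0]]
lora_a = [[0.5, 0.0, -1.0]]
merged = merge_lora(w, lora_b, lora_a)
print(merged)
```

Because the merged matrix has the same shape as the original, the adapted model runs at inference with no extra layers.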

Concrete Example with Base Transformer:

Consider the base Transformer model introduced by Vaswani et al. in 2017:

- Transformer weights have dimensions $d \times k = 512 \times 64$, totaling 32,768 trainable parameters.

- With LoRA and a rank $r = 8$:

  - Matrix A has dimensions $r \times k = 8 \times 64$, resulting in 512 trainable parameters.

  - Matrix B has dimensions $d \times r = 512 \times 8$, leading to 4,096 trainable parameters.

Additional Example:

- Let's say we have another base Transformer model whose weight matrix has dimensions $256 \times 32$, totaling 8,192 parameters.

- With LoRA and the same rank $r = 8$:

  - Matrix A would have $8 \times 32 = 256$ trainable parameters.

  - Matrix B would have $256 \times 8 = 2{,}048$ trainable parameters.

- This illustrates the versatility of LoRA across different model sizes: the number of trainable parameters drops substantially while the frozen weights keep what the model already learned.

In the first example, LoRA trains 512 + 4,096 = 4,608 parameters instead of 32,768, an 86% reduction in parameters to train. In the second, it trains 2,304 instead of 8,192, a reduction of about 72%. Fewer trainable parameters means lower computational cost during fine-tuning.
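The parameter counts in both examples follow from the matrix shapes, so they are easy to recompute. Here is a small helper for that; the function name `lora_parameter_counts` is just for this post.

```python
def lora_parameter_counts(d, k, r):
    """Compare trainable parameters for a d x k weight matrix with and without LoRA."""
    full = d * k
    lora = r * k + d * r  # matrix A (r x k) plus matrix B (d x r)
    reduction = 100 * (full - lora) / full
    return full, lora, reduction

for d, k in [(512, 64), (256, 32)]:
    full, lora, reduction = lora_parameter_counts(d, k, r=8)
    print(f"{d} x {k}: {full:,} -> {lora:,} trainable parameters ({reduction:.0f}% fewer)")
```

Trying other values of `r` shows the trade-off: a smaller rank trains fewer parameters, while a larger rank gives the update more capacity.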

RLHF

RLHF, or Reinforcement Learning from Human Feedback, plays a crucial role in transforming an Instruction Fine-tuned Large Language Model (LLM) into a human-aligned LLM. Let's delve into the details of how RLHF operates and its objectives:

Agent: In the context of RLHF, the agent is represented by the Instruction Fine-tuned LLM. This model has been fine-tuned using high-level instructions or demonstrations to guide its behavior.

Action: The action taken by the agent corresponds to the generation of the next token in a sequence of text. Given a prompt or input, the model generates the subsequent tokens to form a coherent response.

Action Space: The action space encompasses the vocabulary of all tokens available to the model. Each token represents a potential action that the agent can take during text generation.

Goals of Instruction Fine-tuning:

- Enhancing understanding of prompts: By fine-tuning on labeled examples, the model aims to better comprehend the context and requirements of specific tasks.

- Improving task completion: The primary objective is to enhance the model's ability to generate accurate and relevant responses based on the provided instructions.

- Achieving natural language generation: Fine-tuning seeks to refine the model's language generation capabilities, ensuring that the output sounds more human-like and coherent.

Goals of RLHF:

- Maximizing helpfulness and relevance: RLHF aims to maximize the utility of the model's responses by ensuring they are helpful and pertinent to the given context.

- Minimizing harm: RLHF seeks to minimize the potential negative impact of the model's outputs, such as generating inappropriate or harmful content.

- Avoiding dangerous topics: To promote safety and ethical use, RLHF helps steer the model away from generating responses related to sensitive or harmful topics.

In summary, RLHF guides the Instruction Fine-tuned LLM towards generating responses that are not only accurate and contextually relevant but also safe, ethical, and aligned with human expectations. This reinforcement learning approach ensures that the model's outputs are beneficial and considerate of societal norms and values.

Model Evaluation Metrics

Evaluation Metrics:

ROUGE:

ROUGE, or Recall-Oriented Understudy for Gisting Evaluation, is a metric used to assess the quality of text summarization. It measures the overlap between the generated summary and the reference summary in terms of unigrams (ROUGE-1), bigrams (ROUGE-2), and the longest common subsequence (ROUGE-L).

- ROUGE-1 Recall: Measures the proportion of unigrams in the reference summary that are also present in the generated summary.

- ROUGE-1 Precision: Measures the proportion of unigrams in the generated summary that are also present in the reference summary.

- ROUGE-2 Recall: Measures the proportion of bigrams in the reference summary that are also present in the generated summary.

- ROUGE-2 Precision: Measures the proportion of bigrams in the generated summary that are also present in the reference summary.

- ROUGE-L Recall: The length of the longest common subsequence (LCS) of the generated and reference summaries, divided by the number of unigrams in the reference summary.

- ROUGE-L Precision: The length of the same LCS, divided by the number of unigrams in the generated summary.
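These scores are simple enough to compute by hand, so here is a minimal sketch that does it for a pair of short summaries. It assumes whitespace tokenization and non-empty inputs, and it leaves out the stemming and F-score options that full ROUGE implementations offer. The function names are my own.

```python
from collections import Counter

def ngrams(tokens, n):
    """Return the list of n-grams in a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def rouge_n(reference, generated, n):
    """Return (recall, precision) from clipped n-gram overlap."""
    ref, gen = Counter(ngrams(reference, n)), Counter(ngrams(generated, n))
    overlap = sum((ref & gen).values())
    return overlap / sum(ref.values()), overlap / sum(gen.values())

def lcs_length(a, b):
    """Length of the longest common subsequence, by dynamic programming."""
    table = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, x in enumerate(a, 1):
        for j, y in enumerate(b, 1):
            if x == y:
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                table[i][j] = max(table[i - 1][j], table[i][j - 1])
    return table[-1][-1]

def rouge_l(reference, generated):
    """Return (recall, precision) based on the longest common subsequence."""
    lcs = lcs_length(reference, generated)
    return lcs / len(reference), lcs / len(generated)

reference = "it is cold outside".split()
generated = "it is very cold outside".split()
print(rouge_n(reference, generated, 1))
print(rouge_n(reference, generated, 2))
print(rouge_l(reference, generated))
```

The extra word "very" breaks one bigram of the reference, so ROUGE-2 drops while ROUGE-1 and ROUGE-L recall stay perfect.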

BLEU:

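BLEU, or Bilingual Evaluation Understudy, is a metric used to evaluate the quality of text translation. It computes the clipped precision of n-grams (sequences of words) in the generated translation against the reference translation, combines the precisions for different n-gram sizes (typically 1 to 4) with a geometric mean, and applies a brevity penalty to translations that are shorter than the reference.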
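Here is a rough sketch of a sentence-level BLEU score against a single reference. It is a simplification under stated assumptions: whitespace tokens, no smoothing (so any n-gram size with zero matches gives a score of 0), and helper names of my own. Established toolkits handle multiple references, corpus-level statistics, and smoothing.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Return the list of n-grams in a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def clipped_precision(reference, candidate, n):
    """Fraction of candidate n-grams found in the reference, with counts clipped."""
    ref, cand = Counter(ngrams(reference, n)), Counter(ngrams(candidate, n))
    total = sum(cand.values())
    return sum((ref & cand).values()) / total if total else 0.0

def bleu(reference, candidate, max_n=4):
    """Sentence-level BLEU against one reference, without smoothing."""
    precisions = [clipped_precision(reference, candidate, n)
                  for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0
    # Geometric mean of the n-gram precisions
    geo_mean = math.exp(sum(math.log(p) for p in precisions) / max_n)
    # Brevity penalty: penalize candidates shorter than the reference
    if len(candidate) >= len(reference):
        bp = 1.0
    else:
        bp = math.exp(1 - len(reference) / len(candidate))
    return bp * geo_mean

reference = "the cat is on the mat".split()
repeated = "the the the the the the the".split()
print(clipped_precision(reference, repeated, 1))
print(bleu(reference, reference))
print(bleu(reference, repeated))
```

Clipping is what stops a candidate from scoring well by repeating one common word: "the" appears only twice in the reference, so only two of the seven repeats count.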

Perplexity:

Perplexity is a metric commonly used for autoregressive or causal language models. It quantifies how well a language model predicts a sequence of tokens. Perplexity is calculated as the exponentiated average negative log-likelihood of a sequence, or equivalently, the exponentiation of the cross-entropy between the data and model predictions. Note that perplexity is not well-defined for masked language models like BERT.
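The definition translates directly into code. In this sketch the input is the list of probabilities the model assigned to each actual token given the tokens before it; those numbers are invented here, since getting them from a real model depends on the library you use.

```python
import math

def perplexity(token_probs):
    """Exponentiated average negative log-likelihood of a token sequence.

    token_probs[i] is the probability the model assigned to the i-th actual
    token given the tokens before it.
    """
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)

uniform_guess = perplexity([0.25, 0.25, 0.25, 0.25])
confident_model = perplexity([0.9, 0.8, 0.95])
print(uniform_guess)
print(confident_model)
```

A model that always spreads its probability evenly over four options has a perplexity of about 4, so one way to read the number is as the effective count of choices the model is torn between. Lower is better.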

Evaluation Benchmarks

GLUE: GLUE, or General Language Understanding Evaluation, is a versatile benchmark platform designed to assess the performance of natural language understanding systems across various tasks. These tasks span single-sentence tasks like sentiment analysis and acceptability judgment, similarity and paraphrase tasks, as well as inference tasks such as natural language inference (NLI) and question answering (QA).

SuperGLUE: SuperGLUE builds upon GLUE as a more challenging benchmark for evaluating general-purpose language understanding systems. It includes tasks like question answering, natural language inference, word sense disambiguation (WSD), and others, which require deeper understanding and reasoning capabilities.

HELM: HELM, or Holistic Evaluation of Language Models, provides a comprehensive evaluation of language models using datasets such as OpenBookQA, TruthfulQA, IMDB, and RAFT. It assesses models based on metrics like accuracy, calibration, robustness, fairness, bias, toxicity, and efficiency, aiming to provide a holistic understanding of their performance.

MMLU: MMLU, or Massive Multitask Language Understanding, evaluates the knowledge and problem-solving ability of language models with multiple-choice questions drawn from 57 subjects, ranging from elementary mathematics and history to law and medicine. Models are typically evaluated in zero-shot and few-shot settings, so the benchmark reflects what they learned during pretraining rather than task-specific fine-tuning.

References

https://techtalkverse.com/post/artificial-intelligence/llm-basics/
